Practical XAI: Implementing Explainable AI for Real-World Business

Introduction

Artificial intelligence is no longer a futuristic concept; it’s a powerful engine driving decisions in boardrooms, hospitals, and financial markets. Yet, for all its power, AI often operates inside a “black box.” We see the inputs and the outputs, but the complex, multi-layered decision-making process remains a mystery. This opacity isn’t just a technical curiosity—it’s a significant business risk, creating a barrier to trust, accountability, and adoption.

This is where Explainable AI (XAI) comes in. XAI is a set of processes and methods that allows human users to comprehend and trust the results and output created by machine learning algorithms. It’s about cracking open that black box and providing clear, understandable AI decision making explanation.

In this comprehensive guide, we’ll move beyond the academic theory and dive into practical XAI. You’ll learn not just what XAI is, but how to implement it within your organization. We will explore interpretable machine learning techniques, essential XAI tools for business, and a step-by-step framework for building trustworthy AI systems that are compliant, ethical, and ultimately, more effective.

Why ‘Explainable’ is the New Essential for Enterprise AI

For years, the primary metric for AI success was performance. How accurate is the model? How much did it improve efficiency? But as AI becomes more integrated into high-stakes environments, from medical diagnoses to billion-dollar financial trades, performance alone is not enough. The demand for AI transparency solutions has grown from a niche concern to a C-suite imperative. Here’s why enterprise XAI strategies are no longer optional.

Building Foundational Trust

Trust is the currency of business. Customers, employees, and stakeholders are increasingly wary of decisions made by opaque algorithms. When a customer is denied a loan or offered a specific product, they deserve to know why. XAI provides that clarity, fostering trust and improving user adoption. It transforms a skeptical user into an informed partner, confident in the technology they are using.

Navigating the Maze of Regulatory Compliance

Regulators are catching up to technology. With mandates like the EU’s Artificial Intelligence Act and principles within GDPR that touch on automated decision-making, the legal and financial risks of non-compliance are soaring. Regulatory compliance AI requires organizations to produce auditable AI models. If a regulator asks why your AI system denied service to a protected group, “we don’t know, the algorithm decided” is not a valid defense. XAI provides the documentation and rationale needed to demonstrate fairness and compliance.

Mitigating Critical Business Risks

A black-box model can hide serious flaws. It might latch onto spurious correlations in the data, leading to biased or nonsensical outcomes. These errors can result in significant financial losses, reputational damage, and legal battles. By understanding why a model makes its predictions, teams can identify and correct these flaws before they impact the business, ensuring robust and reliable performance. This is a cornerstone of AI accountability.

Enhancing Model Performance and Debugging

Explainability isn’t just about external transparency; it’s a powerful tool for your data science team. When a model underperforms, XAI techniques can pinpoint the problematic features or data points causing the issue. This insight accelerates the debugging process, leading to faster development cycles and more accurate, robust models. It’s about working smarter, not just harder, by understanding AI decisions at a granular level.

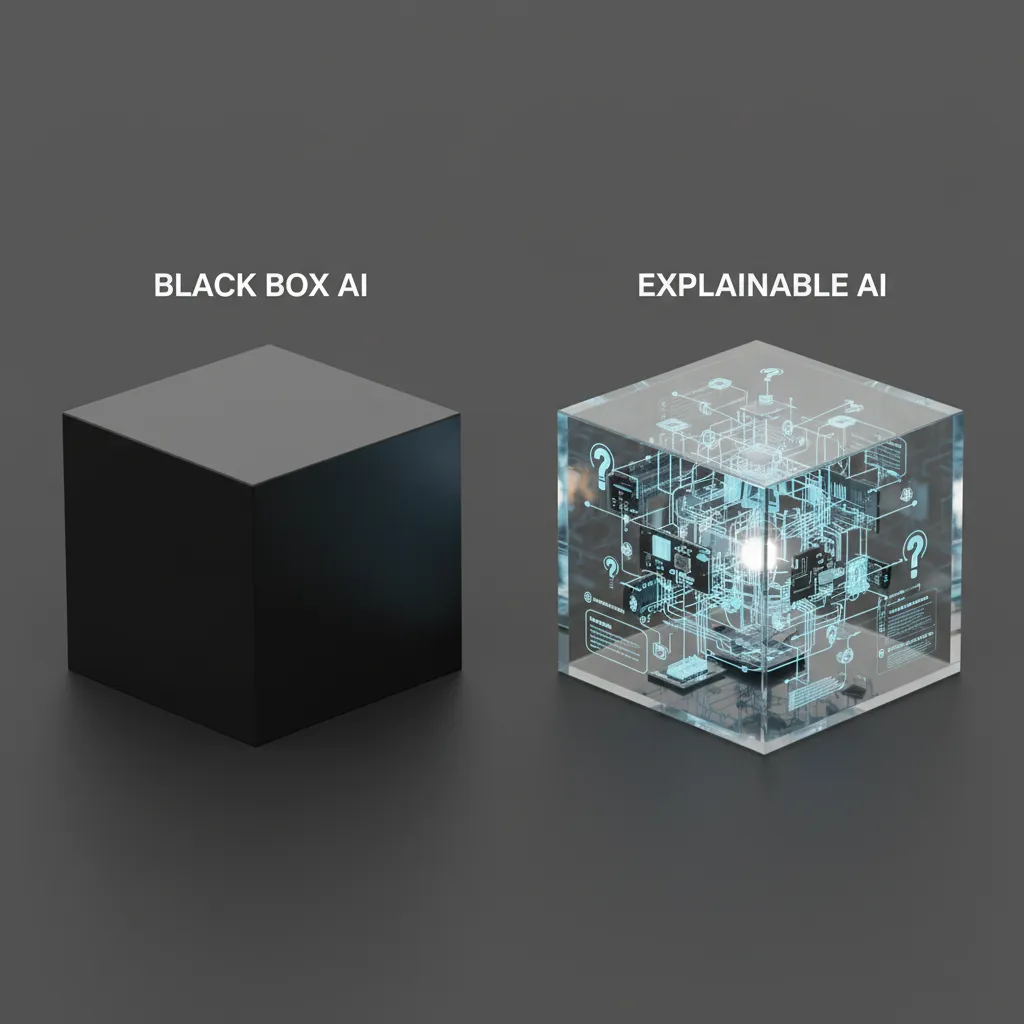

The Explainability Spectrum: From White-Box Simplicity to Black-Box Complexity

Not all AI models are created equal when it comes to transparency. Understanding the explainability spectrum is crucial for choosing the right approach for your specific business need. It’s a constant trade-off between a model’s predictive power and its inherent interpretability.

H3: White-Box Models: Inherently Transparent

White-box AI models (or glass-box models) are inherently interpretable. Their internal logic is straightforward enough for an expert to inspect and understand. Think of them as simple machines with visible gears.

- Examples: Linear Regression, Logistic Regression, Decision Trees.

- Pros: Easy to explain, computationally efficient, and their decision-making process is fully transparent. Perfect for situations where explainability is a legal or ethical requirement.

- Cons: They often lack the predictive accuracy to handle highly complex, non-linear data patterns, such as those found in image recognition or natural language processing.

H3: Black-Box Models: The Power and the Problem

Black-box models are the powerhouses of modern AI, capable of achieving state-of-the-art performance on incredibly complex tasks. However, their internal workings, often involving millions or billions of parameters, are virtually impossible for a human to decipher directly.

- Examples: Deep Neural Networks (DNNs), Gradient Boosting Machines (GBMs), Random Forests.

- Pros: Unmatched predictive accuracy for complex, high-dimensional data.

- Cons: Their opacity makes them difficult to trust, debug, and audit. This is the core problem that black-box AI explanation techniques aim to solve.

For most modern business challenges, the high performance of black-box models is necessary. Therefore, the focus of practical XAI is not on avoiding these models, but on developing methods to shed light on their decision-making processes after the fact. This is where post-hoc interpretability becomes essential.

Unlocking the Black Box: Key XAI Techniques and Tools

Since we can’t simply read the mind of a neural network, data scientists have developed clever techniques to probe and question these models, forcing them to reveal their logic. These machine learning interpretability methods are the core of practical XAI.

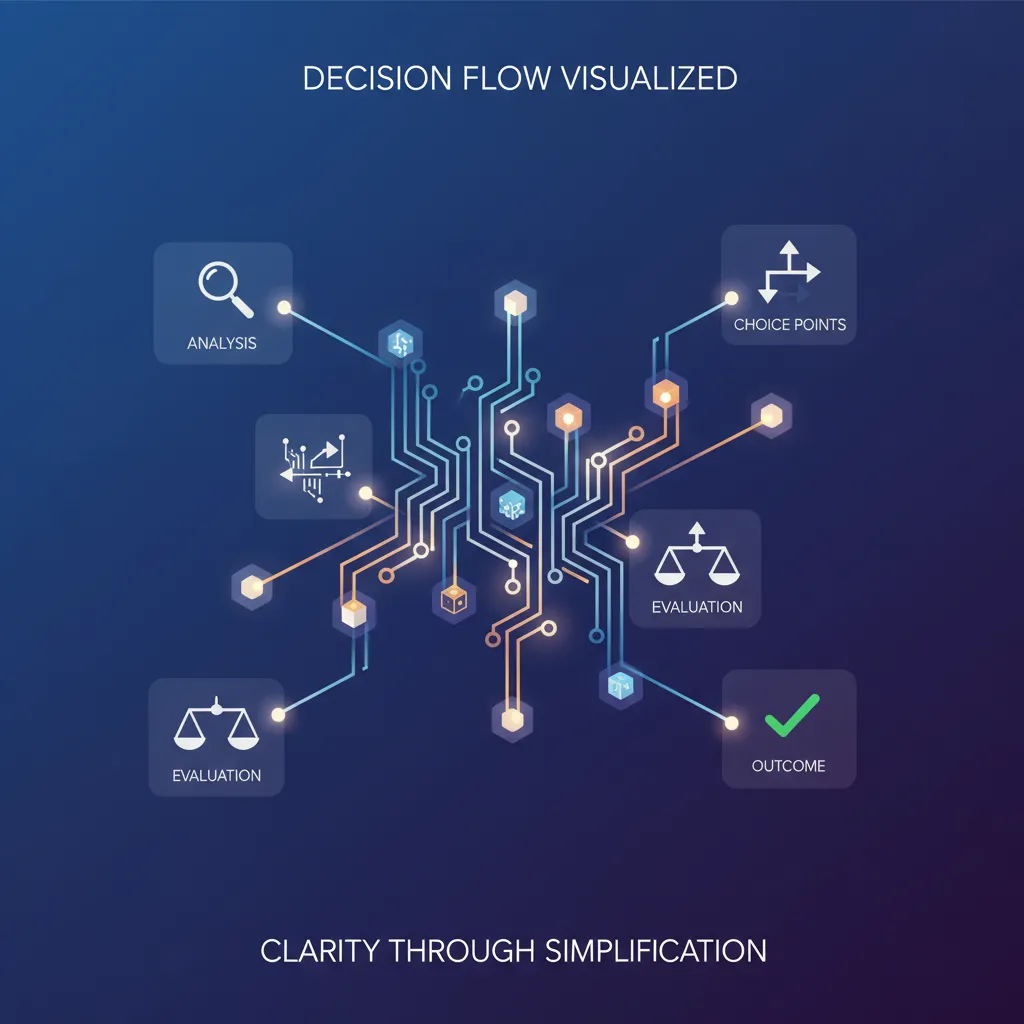

Local vs. Global Explanations: Understanding the Scope

Before diving into specific techniques, it’s important to understand two levels of explanation:

- Global Explanations: These describe the model’s overall behavior. They answer questions like, “What are the most important features driving predictions across the board?”

- Local Explanations: These focus on a single prediction. They answer, “Why was this specific customer’s loan application denied?” or “Why was this particular image classified as a cat?”

Post-Hoc Interpretability for Complex Models

Post-hoc techniques are applied after a model has been trained. They are model-agnostic, meaning they can be used on any black-box model, making them incredibly versatile. The two most popular methods are LIME and SHAP.

LIME (Local Interpretable Model-agnostic Explanations)

Imagine trying to understand a complex, curved line at a single point. You could draw a straight, simple line that approximates the curve just in that tiny area. That’s essentially what LIME does. For a single prediction, it creates a simple, interpretable model (like linear regression) that mimics the behavior of the complex black-box model in that local vicinity. This provides a clear, intuitive explanation for an individual outcome.

SHAP (SHapley Additive exPlanations)

SHAP is a more sophisticated approach rooted in cooperative game theory. It explains a prediction by calculating the contribution of each feature to that prediction. Think of it as fairly distributing the “payout” (the prediction) among the “players” (the features). SHAP values tell you not only which features were important but also how they pushed the prediction higher or lower. A key advantage of SHAP is that it can provide both detailed local explanations and robust global explanations, making it one of the most powerful LIME SHAP explanations tools.

Popular XAI Tools for Business

Implementing these techniques from scratch can be complex. Fortunately, a rich ecosystem of open-source libraries and enterprise platforms has emerged to simplify Explainable AI implementation.

- Open-Source Libraries:

- SHAP: The go-to Python library for implementing SHAP values. It’s highly versatile and integrates well with popular ML frameworks like Scikit-learn, PyTorch, and TensorFlow.

- LIME: The original Python library for the LIME algorithm, excellent for generating intuitive local explanations.

- InterpretML: A Microsoft-backed open-source package that includes various state-of-the-art explainability algorithms and tools for generating interactive dashboards.

- Enterprise Platforms:

- Google Cloud AI Explanations: Integrated directly into the Google Cloud Platform, providing feature attributions for models deployed on AI Platform.

- IBM Watson OpenScale: A comprehensive platform for AI lifecycle management that includes robust model monitoring and explainability features to detect and mitigate bias and drift.

- Fiddler AI: An enterprise-focused Model Performance Management (MPM) platform that offers powerful explainability and model monitoring capabilities.

A Practical Framework: Your 5-Step Enterprise XAI Strategy

Moving from concept to execution requires a structured approach. An effective enterprise XAI strategy integrates explainability into the entire machine learning lifecycle, ensuring it’s a core component, not an afterthought.

Step 1: Define Your Explainability Goals

Start with “why.” What do you need to explain, and to whom? The explanation for a data scientist debugging a model will be vastly different from the one provided to a customer or a compliance officer.

- For Regulators: Focus on fairness, bias detection, and auditable decision trails.

- For Customers: Emphasize transparency and clear, simple reasons for outcomes.

- For Developers: Provide detailed feature importance and model behavior insights for debugging.

- For Executives: Offer high-level summaries linking model behavior to business KPIs.

Step 2: Choose the Right Models and Techniques

Based on your goals, decide on the appropriate model and XAI technique. Is the inherent transparency of a white-box AI model sufficient? Or do you need the power of a black-box model coupled with post-hoc interpretability tools like SHAP? This is a critical decision in building transparent AI that balances performance with clarity.

Step 3: Integrate XAI into the ML Development Lifecycle

Explainability should not be a final step performed only after a model is in production. It should be a continuous process.

- During Data Analysis: Use XAI to understand feature relationships and potential biases in the training data.

- During Model Training: Compare the explanations of different models to select the one that is not only accurate but also logical.

- During Model Validation: Ensure the model’s reasoning aligns with domain knowledge.

- During Production Monitoring: Continuously monitor explanations to detect data drift or concept drift, where the model’s behavior changes over time.

Step 4: Implement Human-Centered Explanations

The raw output of an XAI algorithm is often a complex set of numbers and graphs. The final, crucial step is translating this data into a meaningful, context-aware narrative for the intended audience. This is the essence of human-centered AI. A loan officer doesn’t need a SHAP plot; they need a clear sentence like, “The application was flagged due to a high debt-to-income ratio and a short credit history.” Related: AI-Powered Inclusive Design: Crafting Experiences for Everyone.

Step 5: Establish Governance and Accountability

Finally, establish clear ownership and ethical AI frameworks. Who is responsible for reviewing and acting on XAI insights? What is the protocol if an explanation reveals unfair bias? An AI ethics in practice committee or review board can provide oversight, ensuring that explainability leads to real accountability and continuous improvement.

XAI in Action: Real-World Use Cases and Applications

The benefits of XAI are not theoretical. Across industries, companies are leveraging explainability to create better, safer, and more valuable AI systems. Here are a few key XAI practical applications.

XAI in Finance: Beyond the Credit Score

The financial services industry is built on trust and regulation, making it a prime candidate for XAI.

- Loan Decisions: Providing clear reasons for loan application approvals or denials to both customers and regulators.

- Fraud Detection: Allowing investigators to understand why a transaction was flagged as fraudulent, reducing false positives and improving accuracy.

- Algorithmic Trading: Helping firms understand and audit the behavior of high-frequency trading bots to ensure they operate within risk parameters. Related: AI Trading Bots: Your Guide to Automated Investing.

XAI in Healthcare: Trusting the Diagnosis

In healthcare, where decisions can be life-or-death, explainability is a moral and clinical necessity.

- Medical Imaging: Highlighting the specific pixels in an MRI or X-ray that led an AI to detect a tumor, allowing radiologists to verify the finding.

- Patient Risk Stratification: Explaining why a patient has been identified as high-risk for a certain condition, enabling proactive and personalized care.

- Drug Discovery: Understanding which molecular features a model is using to predict the efficacy of a drug candidate, speeding up research and development. Related: The AI Health Revolution: Smart Tracking & Proactive Wellness.

XAI in E-commerce and Marketing

XAI helps businesses understand their customers better and optimize their strategies.

- Recommendation Engines: Explaining why a specific product was recommended to a user (“Because you bought X and showed interest in Y”).

- Customer Churn Prediction: Identifying the key factors that lead a customer to leave, allowing for targeted retention efforts.

- Ad Targeting: Ensuring that ad algorithms are not using sensitive or protected attributes for targeting, avoiding discriminatory practices.

The Future of Explainable AI: Trends and What’s Next

The field of XAI is evolving rapidly. As we look ahead, several trends are shaping the future of explainable AI.

- Causal AI: Moving beyond correlation to causation. Future XAI will not just show which features were important, but will help us understand the causal relationships driving outcomes, enabling more effective interventions.

- Automated Explainability: New tools will automate much of the process of generating and translating explanations, making XAI more accessible to non-experts.

- Enhanced Focus on Data Privacy: Techniques are being developed that can provide explanations without exposing sensitive underlying personal data, balancing transparency with data privacy AI. Related: Decentralized Science (DeSci): Can Blockchain and AI Revolutionize Research?.

- Standardization and Regulation: Expect more industry standards and government regulations codifying the requirements for AI explainability, making it a non-negotiable aspect of AI development.

Conclusion

The era of accepting AI decisions on blind faith is over. In today’s business landscape, transparency is not a feature; it’s the foundation of trust, compliance, and long-term success. Implementing Explainable AI is a journey that transforms artificial intelligence from a powerful but opaque tool into a transparent and trustworthy partner.

By adopting XAI development best practices, leveraging tools like LIME and SHAP, and building a robust enterprise XAI strategy, your organization can demystify its AI systems. You can mitigate risk, meet regulatory demands, and build deeper trust with your customers and stakeholders. The path to building transparent AI is clear. It’s time to open the black box and unlock the full, responsible potential of your AI investments.

Frequently Asked Questions (FAQs)

Q1. What is explainable AI (XAI) in simple terms?

Explainable AI (XAI) refers to methods and technologies that make the decision-making process of an artificial intelligence system understandable to humans. Instead of a “black box” where you only see the input and output, XAI provides insights into how the AI reached a specific conclusion, increasing transparency and trust.

Q2. What is a real-world example of explainable AI?

In finance, if an AI model denies a person’s loan application, an XAI system can provide the specific reasons. For instance, it might state the denial was based on a combination of a high debt-to-income ratio, a low credit score, and a short employment history, making the decision transparent and auditable.

Q3. What are the three core principles of XAI?

The three core principles of XAI are generally considered to be:

- Transparency: The ability to understand the mechanics of the model.

- Interpretability: The ability to explain a model’s prediction in human-understandable terms.

- Explainability: The ability to provide a clear, tailored explanation for a specific audience about how a decision was made.

Q4. Why is implementing XAI so challenging?

XAI is challenging due to the inherent trade-off between a model’s performance and its interpretability. The most accurate models, like deep neural networks, are often the most complex and opaque. Implementing XAI requires specialized expertise, additional computational resources, and a thoughtful strategy to translate technical outputs into meaningful business insights.

Q5. What is the difference between LIME and SHAP?

LIME (Local Interpretable Model-agnostic Explanations) explains a single prediction by creating a simple, local approximation of the model around that prediction. SHAP (SHapley Additive exPlanations) is based on game theory and calculates the precise contribution of each feature to the prediction’s outcome. While both provide local explanations, SHAP is often considered more robust and can also provide accurate global feature importance.

Q6. Can any AI model be made explainable?

Yes, in principle. Post-hoc XAI techniques like LIME and SHAP are “model-agnostic,” meaning they can be applied to virtually any trained model, including complex black-box systems like neural networks. The quality and type of explanation may vary, but these tools provide a universal approach to interpreting AI decisions.